Two-way SSL authentication

In this post, let's explore in detail how two-way SSL authentication (also known as mutual TLS, or mTLS) works. It is primarily used in application-to-application communication.

To understand secure communication between a browser and a server, read this post.

Some basics:

Encryption: It's the process of converting plain, readable data (plaintext) into an unreadable form (ciphertext) using an algorithm and a key.

Decryption: It's the process of converting encrypted data (ciphertext) back into its original, readable form (plaintext) using a decryption algorithm and a key.

Symmetric Key Encryption: In symmetric key encryption, there's only a single key and the same is used for both encryption and decryption.

Asymmetric Key Encryption: Also known as public-key encryption, asymmetric key encryption uses a pair of keys: a public key and a private key. The public key is used for encryption, while the private key is used for decryption. Data encrypted with a public key can only be decrypted with its corresponding private key, and vice versa. The reverse direction (transforming with the private key, checking with the public key) is the basis of digital signatures.
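To make the key-pair relationship concrete, here is a minimal sketch of textbook RSA with deliberately tiny primes. The numbers are illustrative assumptions only; real systems use keys of 2048 bits or more together with padding schemes, so this should never be used for actual encryption.

```python
# Toy RSA with tiny primes, for illustration only (requires Python 3.8+).
p, q = 61, 53                  # two small primes (assumed values for the demo)
n = p * q                      # modulus shared by both keys
phi = (p - 1) * (q - 1)        # Euler's totient of n
e = 17                         # public exponent: the public key is (e, n)
d = pow(e, -1, phi)            # private exponent: the private key is (d, n)

message = 65                   # a "plaintext" number smaller than n

# Direction 1: encrypt with the public key, decrypt with the private key.
ciphertext = pow(message, e, n)
recovered = pow(ciphertext, d, n)

# Direction 2 ("vice versa"): transform with the private key,
# recover with the public key. This is the idea behind signatures.
signature = pow(message, d, n)
verified = pow(signature, e, n)

print(ciphertext, recovered, verified)
```

Both `recovered` and `verified` come back as the original message, which is the "and vice versa" property described above.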

Certificate Authority (CA): A trusted entity that issues digital certificates used to verify the identity of individuals, organizations, or servers in a networked environment. E.g. Verisign

Certificate Signing Request: A Certificate Signing Request (CSR) is a message sent to a CA by an applicant to have a digital certificate issued. The CSR contains information that will be included in the certificate, such as the applicant's public key, organization name, and domain name.

Digital Certificate: A document issued by a CA that contains the entity's (applicant's) identity details and public key, together with the CA's signature.

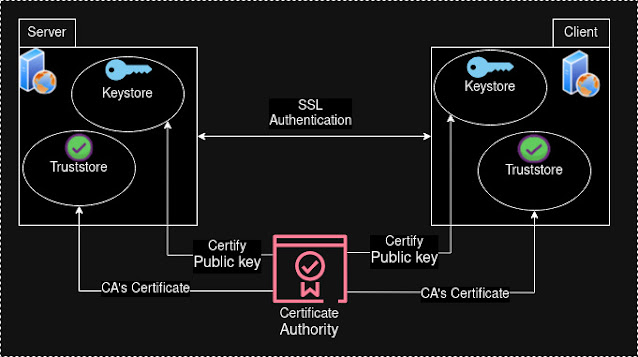

Keystore: A storage mechanism used to store and manage cryptographic keys and certificates. E.g. JKS (Java KeyStore), used in Java applications to store private keys and certificates.

Truststore: A truststore is a repository that contains a collection of trusted certificates. It is used to store and manage the certificates of external entities that are trusted by a system or application. These entities could be other servers, clients, or Certificate Authorities (CAs). The primary purpose of a truststore is to verify the identity of these external entities during secure communications.

Setting up:

- Generate a key pair for each of the server and the client using tools such as keytool or openssl.

- Generate a Certificate Signing Request (CSR) for both the server's and the client's key pair.

- Submit each CSR to a CA, which verifies it and issues a digital certificate.

- Install each entity's private key in its own keystore. For example, the server's private key is installed in the server's keystore.

- Install the digital certificate issued by the CA in the corresponding entity's keystore.

- Install the CA's own certificate in the truststore of both the server and the client.
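The first two setup steps can be sketched as a small Python wrapper around the `openssl` command line. This is only an illustration under assumptions: the file names (`server.key`, `server.csr`, and so on) and the `example.com` subject are placeholders, and the script defaults to a dry run that just prints the commands. Submitting the CSR and installing the results depends on the CA and keystore tooling in use, so those steps are not shown.

```python
import subprocess


def key_and_csr_commands(name):
    """Return openssl argument lists that create <name>.key and <name>.csr."""
    return [
        # Step 1: generate a 2048-bit RSA private key (the public key derives from it).
        ["openssl", "genrsa", "-out", f"{name}.key", "2048"],
        # Step 2: create a CSR for that key; the subject is a placeholder value.
        ["openssl", "req", "-new", "-key", f"{name}.key",
         "-out", f"{name}.csr", "-subj", f"/CN={name}.example.com"],
    ]


def run_all(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for argv in commands:
        print(" ".join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    # One key pair and one CSR for each side of the connection.
    for entity in ("server", "client"):
        run_all(key_and_csr_commands(entity))
```

Calling `run_all(..., dry_run=False)` would actually invoke `openssl`, which must then be installed and on the `PATH`.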

How authentication works:

- When establishing a connection (during the SSL/TLS handshake), the server and the client exchange their digital certificates.

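- Each entity verifies that the other entity's certificate is signed (issued) by a CA it trusts, meaning the corresponding CA's certificate is installed in its truststore. Each side also proves during the handshake that it holds the private key matching its certificate, so presenting a copied certificate alone is not enough.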

- With the SSL/TLS handshake completed, both the client and the server use the session keys generated during the key exchange to encrypt and decrypt the data transmitted between them, ensuring secure communication.
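The flow above can be sketched with Python's standard `ssl` module. This is a minimal sketch under assumptions, not a complete server or client: the function names and file parameters are hypothetical, and Python works with PEM files rather than a JKS keystore. Here the certificate and key files play the role of the keystore, and the CA file plays the role of the truststore.

```python
import ssl


def make_server_context(certfile, keyfile, cafile):
    """Server side: present our certificate and require one from the client."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # "Keystore" role: the server's own certificate and private key.
    ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    # "Truststore" role: the CA certificate used to validate client certificates.
    ctx.load_verify_locations(cafile=cafile)
    # This line makes it two-way: the handshake fails without a valid client certificate.
    ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx


def make_client_context(certfile, keyfile, cafile):
    """Client side: verify the server and present our own certificate."""
    # PROTOCOL_TLS_CLIENT enables server certificate and hostname checks by default.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    # "Keystore" role: the client's own certificate and private key.
    ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    # "Truststore" role: the CA certificate used to validate the server certificate.
    ctx.load_verify_locations(cafile=cafile)
    return ctx
```

A server would then wrap its accepted socket with `ctx.wrap_socket(sock, server_side=True)`, and a client would use `ctx.wrap_socket(sock, server_hostname=...)`. The handshake, certificate checks, and session-key setup all happen inside those calls.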